Throughout history, the definition of a “computer” has evolved significantly. Before the era of modern electronics, the word “computer” referred to a person who performed mathematical calculations, often by hand. But the question of who truly invented and designed the world’s first computer—if defined as a device that can perform automated calculations based on input and programmable instructions—requires delving into the origins of computing machinery in the 19th and 20th centuries. This article explores the major figures associated with that groundbreaking innovation, focusing particularly on Charles Babbage and the development of early mechanical computing devices.

The Conceptual Birth of the Computer: Charles Babbage

When discussing the first computer, many historians and computer scientists turn to the English mathematician and engineer Charles Babbage (1791–1871). Often hailed as the “Father of the Computer,” Babbage designed mechanical devices that laid the conceptual foundation for modern computing.

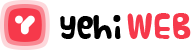

In the early 1820s, Babbage began work on a machine he called the Difference Engine. It was intended to automate the computing and printing of mathematical tables, such as logarithmic and trigonometric tables. The machine used the method of finite differences, which evaluates a polynomial at successive points using only repeated addition, with no need for multiplication or division.
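The method of finite differences can be illustrated with a minimal Python sketch. The function names and the sample polynomial x² + x + 41 are chosen here for illustration only; the code models the arithmetic, not Babbage's mechanism. Subtraction is used once to set up the starting differences, and every later table entry is produced by addition alone.

```python
def initial_differences(seed_values):
    """Return the first entry of each difference column for the seed values."""
    diffs = []
    row = list(seed_values)
    while row:
        diffs.append(row[0])
        # Next column: differences between neighbouring entries.
        row = [b - a for a, b in zip(row, row[1:])]
    return diffs


def tabulate(seed_values, count):
    """Produce `count` table entries using additions only after setup.

    For a polynomial of degree d, pass d + 1 consecutive seed values.
    """
    registers = initial_differences(seed_values)
    table = []
    for _ in range(count):
        table.append(registers[0])
        # Add each higher-order difference into the register before it.
        for i in range(len(registers) - 1):
            registers[i] += registers[i + 1]
    return table


# Seed values of x**2 + x + 41 at x = 0, 1, 2.
print(tabulate([41, 43, 47], 6))
```

Each pass of the inner loop plays the role of one turn of the machine: the highest difference stays constant, and every other register absorbs the one after it.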

Babbage’s Difference Engine was never completed in his lifetime, owing to a lack of funding and the technological limitations of the era. However, the project laid the groundwork for what would become his most ambitious design: the Analytical Engine.

The Analytical Engine: The First General-Purpose Computer

The Analytical Engine, conceived in 1837, is widely regarded by historians as the first conceptual design of a general-purpose computer. Unlike Babbage’s earlier machine, the Analytical Engine was not restricted to solving a specific set of calculations. Instead, it could be programmed using punched cards and could execute any mathematical operation or algorithm represented through a series of instructions.

Babbage envisioned the Analytical Engine to have several components that resemble modern computers:

- The ‘Store’: Equivalent to today’s memory. It was designed to hold data and intermediate results.

- The ‘Mill’: Functioned similarly to the modern CPU, processing and performing operations.

- Punched Cards: Used to input data and instructions, inspired by the Jacquard loom system for textile manufacturing.

- A printer and output mechanism: For displaying the results.
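The division of labor between these components can be sketched as a toy model in Python. This is only an illustration under loud assumptions: the card format, the operation names, and the function `run_engine` are hypothetical and do not reproduce Babbage's actual card system or instruction set.

```python
def run_engine(cards, store):
    """Run a deck of hypothetical operation cards against a store.

    cards: tuples such as ("add", src_a, src_b, dest) or ("print", src).
    store: list of numbers, indexed like numbered storage columns.
    """
    # The "mill" performs the arithmetic.
    mill = {
        "add": lambda a, b: a + b,
        "sub": lambda a, b: a - b,
        "mul": lambda a, b: a * b,
    }
    store = list(store)  # work on a copy of the caller's data
    printed = []         # stands in for the printer
    for card in cards:
        op = card[0]
        if op == "print":
            printed.append(store[card[1]])
        else:
            _, src_a, src_b, dest = card
            store[dest] = mill[op](store[src_a], store[src_b])
    return printed


# Compute 3 * 4 + 3 and print the result.
deck = [("mul", 0, 1, 2), ("add", 2, 0, 2), ("print", 2)]
print(run_engine(deck, [3, 4, 0]))
```

The point of the sketch is the separation itself: the deck of cards can be swapped for a different one without changing the mill or the store, which is what distinguishes a programmable machine from a fixed-purpose calculator.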

Despite its brilliant architecture, the Analytical Engine was never built due to financial obstacles, lack of sufficiently precise mechanical parts, and Babbage’s shifting focus between multiple projects. Still, the detailed plans and the philosophical importance of the device cement its place as the first true design for a programmable computer.

Ada Lovelace: The First Computer Programmer

While Babbage was responsible for the hardware and conceptual design of the Analytical Engine, his close collaborator Ada Lovelace (1815–1852) played a pivotal role in suggesting how it could be used creatively. An English mathematician and writer, Lovelace translated a paper on the Analytical Engine by the Italian engineer Luigi Menabrea and expanded it with her own extensive notes, published in 1843.

In her notes, she set out what is now widely considered the first published computer algorithm: a method for computing Bernoulli numbers using the Analytical Engine. For this reason she is often described as the world’s first computer programmer.
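To give a sense of the calculation involved, here is a minimal Python sketch that computes Bernoulli numbers. It is not a transcription of Lovelace's program: it uses a standard modern recurrence and the convention B₁ = −1/2, and the function name `bernoulli_numbers` is an assumption made for illustration.

```python
from fractions import Fraction
from math import comb


def bernoulli_numbers(n):
    """Return [B_0, ..., B_n] as exact fractions.

    Uses the recurrence sum_{k=0}^{m} C(m+1, k) * B_k = 0 for m >= 1,
    solved for B_m at each step.
    """
    b = [Fraction(1)]
    for m in range(1, n + 1):
        total = sum(comb(m + 1, k) * b[k] for k in range(m))
        b.append(-total / (m + 1))
    return b


for index, value in enumerate(bernoulli_numbers(8)):
    print(index, value)
```

Exact fractions are used because the values are rational and floating-point rounding would obscure them. Each new value depends on all the earlier ones, which is why the calculation calls for repeating a sequence of operations.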

Lovelace also realized the broader implications of the Analytical Engine. She wrote that it could potentially manipulate not only numbers but also symbols, music, and texts, provided the logic was translatable into operations. This visionary perspective predates modern ideas of general-purpose computers as multimedia and symbolic processors.

The Forgotten Legacy and Rediscovery

Despite the genius of Babbage’s designs, they were largely forgotten in the decades following his death. Rapid development in electrical and electronic technologies over the 20th century took new directions, often without direct awareness of Babbage’s pioneering work.

It wasn’t until the late 20th century that computer historians began to appreciate the full extent of Babbage’s contribution. In 1991, to mark the bicentenary of Babbage’s birth, the Science Museum in London completed a working Difference Engine No. 2 built to Babbage’s original designs. The machine worked as intended, demonstrating the soundness of his designs and underscoring that the obstacles had been practical and technological, not conceptual.

Electromechanical and Electronic Computers: The Next Wave

The first true working computers were developed more than a century after Babbage. Some notable developments include:

- Konrad Zuse in Germany built the Z3, the world’s first electromechanical programmable computer, in 1941.

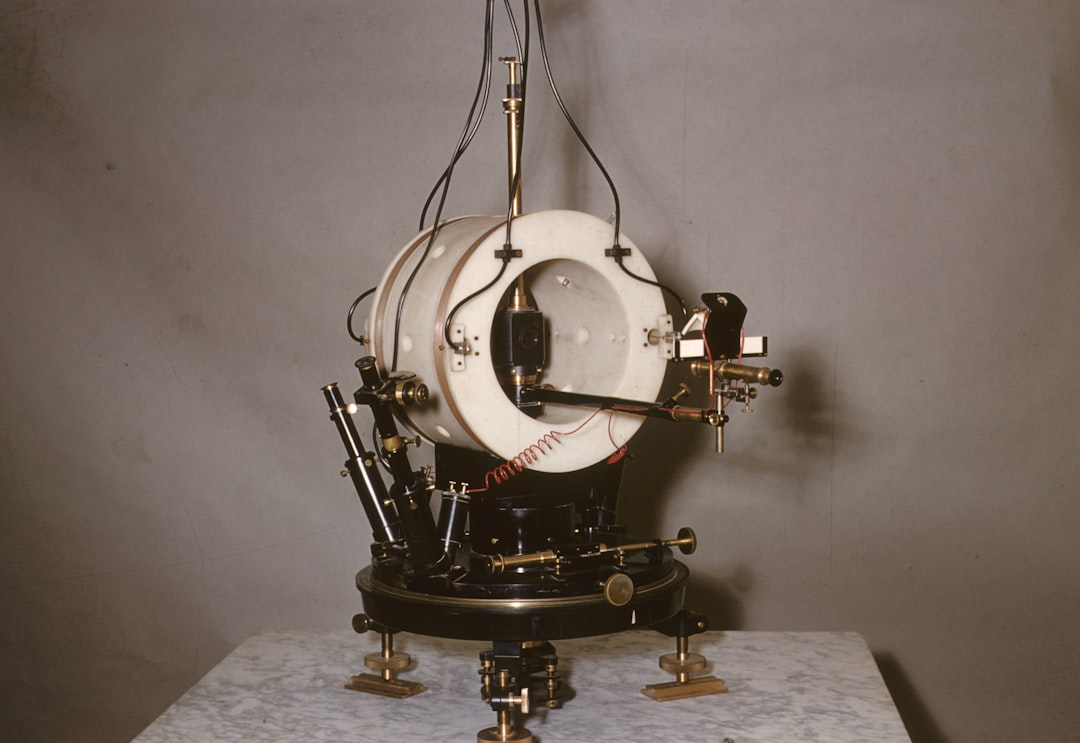

- Alan Turing conceptualized the Turing Machine and contributed to the development of the electromechanical Bombe, which helped crack the Enigma code during World War II.

- John Atanasoff and Clifford Berry created the Atanasoff–Berry Computer (ABC) in the United States, often credited as the first electronic digital computer.

- ENIAC (Electronic Numerical Integrator and Computer), developed by John Mauchly and J. Presper Eckert, was completed in 1945, publicly unveiled in 1946, and marked a turning point in practical electronic computing.

While these machines were crucial in defining what we now know as modern computers, they were built on a conceptual platform first imagined by Babbage and Lovelace a century earlier.

Why Charles Babbage Is Still Considered the Inventor of the First Computer

Though no complete engine emerged from his workshop in his lifetime, Charles Babbage’s design for the Analytical Engine meets the key criteria of a general-purpose computer:

- It could be programmed.

- It had components for processing, memory storage, and input/output.

- Its execution of instructions could follow loops and conditional logic, a fundamental feature of modern computing.

The scope and insight of his work transcended what was possible in his era, earning him recognition as the intellectual architect of digital computing.

Conclusion

The invention and design of the world’s first computer cannot be credited to a single device or moment, but rather must be viewed as an evolving legacy of intellectual milestones. Charles Babbage and Ada Lovelace were visionaries who conceived the theoretical framework that underpins contemporary computer science. Though they lacked the technology to realize their ideas fully, their designs aligned so closely with modern computing architecture that they remain a cornerstone in any discussion about the origins of computers.

Today’s computers have come a long way from gears and cranks, but they still follow many of the principles Babbage and Lovelace formulated nearly two centuries ago. Understanding this legacy not only honors their contributions but also provides a deeper appreciation for how far we’ve come—and how their vision continues to shape the future of technology.

yehiweb
